
9P as root filesystem (Howto)

It is possible to run a whole virtualized guest system entirely on top of QEMU's 9p pass-through filesystem (Documentation/9psetup). All of the guest system's files are then directly visible inside a subdirectory on the host system, and are therefore directly accessible by both sides.

As an example, this howto shows how to install and set up Debian 11 "Bullseye" as a guest system with 9p as the guest's root filesystem.

In short, we first boot a Debian Live CD with QEMU and then use the debootstrap tool to install a standard basic Debian system into a manually mounted 9p directory. The same approach works almost identically for many other distributions. For related .deb package based distros like Ubuntu, for example, you probably just need to pass a different URL as argument to the debootstrap command.

Motivation

There are several advantages to running a guest OS entirely on top of 9pfs:

- Transparency and Shared File Access: The classical way to deploy a virtualized OS (a.k.a. "guest") on a physical machine (a.k.a. "host") is to create a virtual block device (i.e. one huge file on the host's filesystem). The guest OS then formats and maintains a filesystem on top of that virtualized block device. Because that filesystem is managed by the guest OS, simultaneous file access by host and guest is usually cumbersome and problematic, if not outright dangerous. A 9p pass-through filesystem instead allows convenient simultaneous file access by both host and guest, because the filesystem is just a regular subdirectory somewhere inside the host's own filesystem.

- Partitioning of Guest's Filesystem: In early UNIX days it was common to subdivide a machine's filesystem by creating multiple partitions on the hard disk(s) and mounting those partitions at common points of the system's file system tree. Later this became less common. One had to decide upfront, at installation time, how large the individual partitions should be, and resizing them later on was often considered not worth the hassle (e.g. due to system downtime, admin work time, potential issues). With modern hybrid filesystems like btrfs and ZFS, however, subdividing a filesystem tree into multiple separate parts sees a revival. Their "data sets" (the equivalent of classical hard disk "partitions") come at almost zero cost, because they acquire and release individual data blocks from a shared pool on demand. They therefore require neither size decisions upfront nor any resizing later on. If we deployed filesystems like btrfs or ZFS on the guest side, on top of a virtualized block device, we would defeat many of those filesystems' advantages. If the filesystem is instead deployed solely on the host side by using 9p, we preserve those advantages. We also gain a much more convenient and powerful way to manage every aspect of the filesystem, while the guest OS runs completely independently, without knowing which filesystem it is actually running on.

- (Partial) Live Rollback: Because the filesystem is on the host side, we can snapshot and roll back the filesystem from the host side while the guest is still running. By using "data sets" (as described above) we can even roll back only certain part(s) of the guest's filesystem. For example, we can roll back a software installation while preserving user data, or the other way around.

- Deduplication: With either ZFS or (even better) btrfs on the host, we can reduce the overall storage size, and therefore the storage costs, of deploying a large number of virtual machines (VMs), because both filesystems support data deduplication. In practice VMs usually share a significant amount of identical data. They often run identical operating systems and therefore typically have identical versions of applications, libraries, and so forth. Both ZFS and btrfs can automatically detect and unify identical blocks. This saves a considerable amount of storage space that would otherwise be wasted with a large number of VMs.

Let's start the Installation

Throughout this howto we always run QEMU as a regular user. You don't need to run QEMU with root privileges (on the host) for anything in this article, and for production systems it is generally discouraged to run QEMU as user root.

First we create an empty directory on the host to install the guest system into, for instance somewhere in your (regular) user's home directory.

mkdir -p ~/vm/bullseye

If you are using a filesystem like btrfs or ZFS on the host, you might now want to create the individual filesystem data sets. You would then create the respective (still empty) subdirectories below ~/vm/bullseye (for instance home/, var/, var/log/, root/, etc.). This step is optional. This howto does not describe how to configure those filesystems, but it points out noteworthy aspects along the way where required.
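The optional step above could look like the following on a btrfs host. This is a sketch only. The hypothetical `make_datasets` function creates the listed subdirectories as btrfs subvolumes (btrfs's "data sets") when the target directory lives on btrfs, and as plain directories otherwise. It assumes GNU coreutils `stat` and the `btrfs subvolume create` command from btrfs-progs. ZFS is not covered: there, data sets are created with `zfs create` on data set names rather than on paths.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create guest subdirectories below ROOT, as btrfs
# subvolumes when ROOT lives on btrfs, otherwise as plain directories.
# Parents must be listed before children (e.g. "var" before "var/log"),
# because this sketch does not create missing parents for subvolumes.
make_datasets() {
    local root=$1 sub fstype
    shift
    mkdir -p -- "$root" || return 1
    # GNU stat: %T prints the filesystem type in human readable form
    fstype=$(stat -f -c %T -- "$root") || return 1
    for sub in "$@"; do
        if [ -e "$root/$sub" ]; then
            continue  # already exists, leave it alone
        fi
        if [ "$fstype" = btrfs ]; then
            btrfs subvolume create "$root/$sub" || return 1
        else
            mkdir -p -- "$root/$sub" || return 1
        fi
    done
}

# Usage (on the host, as your regular user):
# make_datasets ~/vm/bullseye home var var/log root
```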

Next we download the latest Debian Live CD image. Before blindly pasting the following commands, check this URL for a newer version of the Live CD image (which is likely to exist).

cd ~/vm
wget https://cdimage.debian.org/debian-cd/current-live/amd64/iso-hybrid/debian-live-11.2.0-amd64-standard.iso
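The image name changes with every point release. One way to avoid hard-coding it is to look it up in the checksum list published next to the images. This is a minimal sketch. It assumes the directory contains a SHA512SUMS file in the usual `<checksum>  <filename>` format. `pick_standard_iso` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read a SHA512SUMS-style list ("<hash>  <file>")
# on stdin and print the file name of the amd64 "standard" live image.
pick_standard_iso() {
    awk '$2 ~ /^debian-live-.*-amd64-standard\.iso$/ { print $2; exit }'
}

# Usage (on the host):
# base=https://cdimage.debian.org/debian-cd/current-live/amd64/iso-hybrid
# cd ~/vm
# wget "$base/SHA512SUMS"
# iso=$(pick_standard_iso < SHA512SUMS)
# wget "$base/$iso"
# sha512sum --ignore-missing -c SHA512SUMS   # verify the download
```

If the name found this way differs from the one used in this howto, adjust the -cdrom argument of the QEMU command accordingly.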

Boot the Debian Live CD image and make our target installation directory ~/vm/bullseye/ on the host available to the VM via 9p.

/usr/bin/qemu-system-x86_64 \
  -machine pc,accel=kvm,usb=off,dump-guest-core=off -m 2048 \
  -smp 4,sockets=4,cores=1,threads=1 -rtc base=utc \
  -boot d -cdrom ~/vm/debian-live-11.2.0-amd64-standard.iso \
  -fsdev local,security_model=mapped,id=fsdev-fs0,multidevs=remap,path=$HOME/vm/bullseye/ \
  -device virtio-9p-pci,id=fs0,fsdev=fsdev-fs0,mount_tag=fs0
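The same command can also be wrapped in a small shell function. Keeping the options in an array makes it easier to comment on and adjust individual options. This is a sketch only. The function name `boot_live_cd` and the `QEMU` override variable are hypothetical additions, while the options themselves are those of the command above.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the Live CD command above.
# $1: Live CD image, $2: host directory to share via 9p.
# Set QEMU=echo to print the command line instead of running it.
boot_live_cd() {
    local iso=$1 share=$2
    local -a args
    args=(
        -machine pc,accel=kvm,usb=off,dump-guest-core=off -m 2048
        -smp 4,sockets=4,cores=1,threads=1 -rtc base=utc
        # boot from the CD-ROM image
        -boot d -cdrom "$iso"
        # 9p backend: "mapped" stores the guest's file ownership and
        # permissions in extended attributes, which is why QEMU can run as
        # a regular user here; "multidevs=remap" keeps inode numbers unique
        # if the shared tree spans several host filesystems
        -fsdev "local,security_model=mapped,id=fsdev-fs0,multidevs=remap,path=$share"
        # 9p transport device; the guest refers to it by mount_tag "fs0"
        -device virtio-9p-pci,id=fs0,fsdev=fsdev-fs0,mount_tag=fs0
    )
    "${QEMU:-/usr/bin/qemu-system-x86_64}" "${args[@]}"
}

# Usage (on the host, as regular user):
# boot_live_cd ~/vm/debian-live-11.2.0-amd64-standard.iso "$HOME/vm/bullseye/"
```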

You should now see the following message:

VNC server running on ::1:5900

If the machine running QEMU (i.e. the one you are installing to) is not the machine you are typing commands on, you need to establish an SSH tunnel. The tunnel makes the remote machine's VNC port available on your workstation.

ssh user@machine -L 5900:127.0.0.1:5900

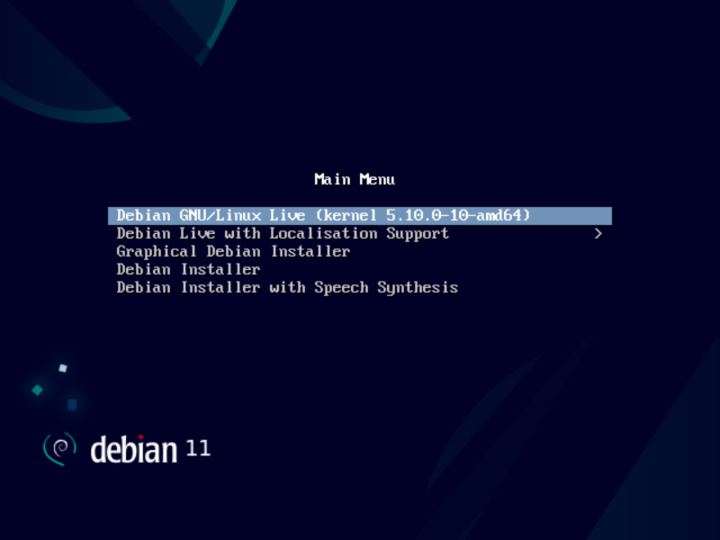

Now start any VNC client of your choice on your workstation and connect to localhost. You should now see the Debian Live CD's boot menu screen inside your VNC client's window.

From the boot menu select "Debian GNU/Linux Live". You should now see the following prompt:

user@debian:/home/user#

This tells you that you are in a shell as a regular user named "user". Let's get super powers (inside that Live CD VM):

sudo bash

Now mount the target installation directory, which we created on the host, inside the guest via the 9p pass-through filesystem.

mkdir /mnt/inst
mount -t 9p -o trans=virtio,version=9p2000.L,posixacl,msize=5000000,cache=mmap fs0 /mnt/inst
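If the mount fails, /mnt/inst stays an ordinary directory inside the Live CD's (RAM-backed) root filesystem. debootstrap would then silently install there instead of into the shared host directory. A small check before continuing can catch that. This is a sketch assuming the usual /proc/mounts format (device, mount point, type, options, ...). `is_9p_mounted` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical check: succeed only if DIR is mounted with filesystem type 9p.
# $1: mount point, $2: mount table to read (defaults to /proc/mounts).
is_9p_mounted() {
    local dir=$1 table=${2:-/proc/mounts}
    awk -v d="$dir" '$2 == d && $3 == "9p" { found = 1 } END { exit !found }' "$table"
}

# Usage (inside the Live CD VM, after the mount command above):
# is_9p_mounted /mnt/inst || echo "9p share is NOT mounted on /mnt/inst" >&2
```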

Next we need to get the debootstrap tool. Note: at this point you might be tempted to ping some host to check whether the Internet connection is working inside the booted Live CD VM. Pinging will not work, but the Internet connection should already be working nevertheless. We omitted any network configuration arguments in the QEMU command above, so QEMU defaults to SLiRP user networking, where ICMP does not work (see Documentation/Networking#Network_Basics).

apt update
apt install debootstrap

If you are using something like btrfs or ZFS for the installation directory and have already subdivided it with some empty directories, you should now fix the permissions the guest system sees. The guest should think it has root permissions on everything, even though the actual directories on the host are probably owned by another user.

chown -R root:root /mnt/inst

Now download and install a "minimal" Debian 11 ("Bullseye") system into the target directory.

debootstrap bullseye /mnt/inst https://deb.debian.org/debian/

Note: you might see some warnings like:

FS-Cache: Duplicate cookie detected

Ignore those warnings. The debootstrap process might take quite some time, so now would be a good time for a coffee break. Once debootstrap is done, you should see the following final message:

I: Basesystem installed successfully.

Now you have a minimal system installation. But it is so minimal that you won't be able to do much with it; it is not yet the basic system that you would have after completing the standard Debian installer.

So let's chroot into the minimal system that we have so far, to be able to install the missing packages.

mount -o bind /proc /mnt/inst/proc
mount -o bind /dev /mnt/inst/dev
mount -o bind /dev/pts /mnt/inst/dev/pts
mount -o bind /sys /mnt/inst/sys
chroot /mnt/inst /bin/bash
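The bind mounts above can also be expressed as a loop. The same list then drives the reverse operation, which matters if you want to release the mounts cleanly before shutting down the Live CD VM (this howto itself does not unmount them): /dev/pts has to be unmounted before /dev. This is a minimal sketch. The function names, the `CHROOT_BINDS` list and the `RUN` dry-run variable are hypothetical.

```bash
#!/usr/bin/env bash
# Directories to bind-mount into the chroot, parents before children.
CHROOT_BINDS=(/proc /dev /dev/pts /sys)

# Hypothetical helper: bind-mount every entry of CHROOT_BINDS below TARGET.
# Set RUN=echo to only print the commands (dry run).
chroot_mount() {
    local target=${1:-/mnt/inst} dir
    for dir in "${CHROOT_BINDS[@]}"; do
        ${RUN:-} mount -o bind "$dir" "$target$dir" || return 1
    done
}

# Hypothetical helper: undo chroot_mount in reverse order, so that
# /dev/pts is unmounted before /dev.
chroot_umount() {
    local target=${1:-/mnt/inst} i
    for (( i = ${#CHROOT_BINDS[@]} - 1; i >= 0; i-- )); do
        ${RUN:-} umount "$target${CHROOT_BINDS[i]}" || return 1
    done
}

# Usage (inside the Live CD VM, as root):
# chroot_mount /mnt/inst && chroot /mnt/inst /bin/bash
# ... after leaving the chroot with "exit":
# umount /mnt/inst/tmp   # the tmpfs mounted on /tmp inside the chroot
# chroot_umount /mnt/inst
```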

Important: now we need to mount a tmpfs on /tmp (inside the chroot environment that we are in now).

mount -t tmpfs -o noatime,size=500M tmpfs /tmp

If you omit the previous step, you will most likely get error messages like the following with the subsequent apt commands below:

E: Unable to determine file size for fd 7 - fstat (2: No such file or directory)

Let's install the next fundamental packages. At this point you might still get some locale warnings; ignore them.

apt update
apt install console-data console-common tzdata locales keyboard-configuration

We need a kernel to boot from. Let's use Bullseye's standard Linux kernel.

apt install linux-image-amd64

Select the time zone for the VM.

dpkg-reconfigure tzdata

Configure and generate locales.

dpkg-reconfigure locales

In the first dialog select at least "en_US.UTF-8", then "Next", then in the subsequent dialog select "C.UTF-8" and finish the dialog.
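You may prefer to configure locales without the dialogs, e.g. in a script. The same result can likely be achieved in three steps: enable the locale in /etc/locale.gen, generate it with locale-gen, and set the default with update-locale. This is a sketch under the assumption that /etc/locale.gen lists the locale as a commented-out line such as `# en_US.UTF-8 UTF-8`, as Debian's locales package does. `enable_locale` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: uncomment one entry in a locale.gen file.
# $1: entry as listed in the file, e.g. "en_US.UTF-8 UTF-8"
# $2: file to edit (default /etc/locale.gen)
enable_locale() {
    local entry=$1 file=${2:-/etc/locale.gen} re
    # escape the dots so that they only match literal dots
    re=$(printf '%s' "$entry" | sed 's/[.]/\\./g')
    sed -i "s/^# *\\($re\\)\$/\\1/" "$file"
}

# Usage (inside the chroot):
# enable_locale "en_US.UTF-8 UTF-8"
# locale-gen
# update-locale LANG=C.UTF-8
```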

The basic installation that you might be used to after running the regular Debian installer is called the "standard" installation. Let's install the missing "standard" packages using the tasksel tool. It should already be installed; if it is not, install it now.

apt install tasksel

The following simple command should usually be sufficient to install all missing packages for a Debian "standard" installation automatically.

tasksel install standard

For some people, however, the tasksel command above does not work (it hangs with the output "100%"). If you encounter that issue, use the following workaround. Let tasksel just dump the list of packages to be installed, then install those packages manually by passing their names as arguments to apt.

tasksel --task-packages standard
apt install ...
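The two steps of this workaround could also be combined via command substitution, e.g. `apt install $(tasksel --task-packages standard)`. The function below is a slightly more defensive sketch. It reads the list from a file and refuses to call apt with an empty list. It assumes tasksel prints one package name per line. The function name and the `RUN` dry-run variable are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: install all package names listed in FILE
# (one per line, empty lines ignored) with a single apt call.
# Reading from a file keeps apt's stdin on the terminal, so apt can still
# ask for confirmation. Set RUN=echo for a dry run.
install_package_list() {
    local file=$1 line
    local -a pkgs=()
    while IFS= read -r line || [ -n "$line" ]; do
        if [ -n "$line" ]; then
            pkgs+=("$line")
        fi
    done < "$file"
    if [ "${#pkgs[@]}" -eq 0 ]; then
        echo "no package names found in $file" >&2
        return 1
    fi
    ${RUN:-} apt install "${pkgs[@]}"
}

# Usage (inside the chroot):
# tasksel --task-packages standard > /tmp/standard-packages.txt
# install_package_list /tmp/standard-packages.txt
```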

Before we can boot from the installation directory, we need to adjust the initramfs to contain the 9p drivers. Remember that we will run 9p as the root filesystem, so the 9p drivers are required before the actual system starts.

cd /etc/initramfs-tools
echo 9p >> modules
echo 9pnet >> modules
echo 9pnet_virtio >> modules
update-initramfs -u
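Running the echo commands above a second time would add duplicate lines to the modules file. A slightly more robust variant only appends a module name if it is not listed yet. This is a minimal sketch. `add_initramfs_modules` is a hypothetical helper, and /etc/initramfs-tools/modules is assumed as the file to edit.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append kernel module names to an initramfs-tools
# "modules" file unless an identical line is already present.
# $1: modules file, remaining args: module names.
add_initramfs_modules() {
    local file=$1 mod
    shift
    touch -- "$file" || return 1
    for mod in "$@"; do
        grep -qxF -- "$mod" "$file" || printf '%s\n' "$mod" >> "$file"
    done
}

# Usage (inside the chroot):
# add_initramfs_modules /etc/initramfs-tools/modules 9p 9pnet 9pnet_virtio
# update-initramfs -u
```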

The previous update-initramfs command might take some time. Once it is done, check that the generated initramfs really contains the three 9p kernel drivers. Adjust the kernel version in the file name below to the one actually installed under /boot.

lsinitramfs /boot/initrd.img-5.10.0-10-amd64 | grep 9p
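The lsinitramfs listing can also be piped into a small check that tests for all three drivers at once. This sketch assumes the modules show up as files named 9p.ko, 9pnet.ko and 9pnet_virtio.ko (optionally with a compression suffix such as .xz) somewhere in the listing. `check_9p_modules` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical check: read an lsinitramfs listing on stdin and report
# which of the three 9p modules are missing. Returns non-zero if any is.
check_9p_modules() {
    local listing mod missing=0
    listing=$(cat)
    for mod in 9p 9pnet 9pnet_virtio; do
        # match ".../<mod>.ko" or ".../<mod>.ko.<ext>" at the end of a line
        if ! printf '%s\n' "$listing" | grep -Eq "/${mod}\.ko(\.[a-z]+)?$"; then
            echo "missing: $mod" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage (inside the chroot; adjust the kernel version):
# lsinitramfs /boot/initrd.img-5.10.0-10-amd64 | check_9p_modules && echo "all 9p modules present"
```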

Let's set the root password for the installed Debian system.

passwd

We probably want Internet connectivity on the installed Debian system. Let's keep it simple here and just configure DHCP, so that the system automatically acquires an IP address, the gateway/router IP and DNS servers.

printf 'allow-hotplug ens3\niface ens3 inet dhcp\n' > /etc/network/interfaces.d/ens3

Important: Finally, we set up a tmpfs on /tmp permanently for the installed Debian system. This is similar to the temporary tmpfs we already mounted above inside the chroot of the Live CD VM that we are still running. There are various ways to configure this permanently for the installed system; here we use the systemd approach.

cp /usr/share/systemd/tmp.mount /etc/systemd/system/
systemctl enable tmp.mount

Alternatively you could of course also configure it by adding an entry to /etc/fstab instead, e.g. something like:

echo 'tmpfs /tmp tmpfs rw,nosuid,nodev,size=524288k,nr_inodes=204800 0 0' >> /etc/fstab

Yet another alternative would be to mount a tmpfs from the host side (on ~/vm/bullseye/tmp).

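This tmpfs on /tmp is currently required to avoid issues with use-after-unlink patterns. In such a pattern, software opens a file, unlinks (deletes) it, and then keeps accessing it through the still open file descriptor, for instance with fstat() as in the error message shown earlier. In practice this only happens for files below /tmp. At least I have not encountered any software so far that used this pattern at locations other than /tmp.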
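The use-after-unlink pattern can be illustrated in a few lines of shell. A file is opened, its name is removed, and the data is then still accessed through the open file descriptor. On a local filesystem or tmpfs this works. On a 9p root without the tmpfs on /tmp, similar accesses are what may fail with errors like the fstat() message shown earlier. This is a minimal, Linux-specific sketch (it relies on /proc/self/fd). The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Illustration of the use-after-unlink pattern (hypothetical helper).
# $1: directory to create the temporary file in (default /tmp).
demo_use_after_unlink() {
    local dir=${1:-/tmp} f
    f=$(mktemp "$dir/unlink-demo.XXXXXX") || return 1
    exec 3<>"$f"           # open the file read/write on fd 3
    rm -f -- "$f"          # unlink it: the name is gone ...
    echo "still here" >&3  # ... but the open fd still refers to the file
    cat /proc/self/fd/3    # read it back via the fd, not via its old path
    exec 3>&-              # close fd 3; only now is the file really freed
}

# Usage:
# demo_use_after_unlink /tmp
```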

Installation is now complete, so let's leave the chroot environment.

exit

Then shut down the Live CD VM.

sync
shutdown -h now

You can now close the VNC client and the VNC SSH tunnel (if you had one); we no longer need them. Finally, hit Ctrl-C to quit QEMU, which is still running the remains of the Live CD VM.

Boot the 9p Root FS System

The standard basic installation is now complete.

Run this command on the host to boot the freshly installed Debian 11 ("Bullseye") system with 9p as the guest's root filesystem:

/usr/bin/qemu-system-x86_64 \
  -machine pc,accel=kvm,usb=off,dump-guest-core=off -m 2048 \
  -smp 4,sockets=4,cores=1,threads=1 -rtc base=utc \
  -boot strict=on -kernel ~/vm/bullseye/boot/vmlinuz-5.10.0-10-amd64 \
  -initrd ~/vm/bullseye/boot/initrd.img-5.10.0-10-amd64 \
  -append 'root=fsRoot rw rootfstype=9p rootflags=trans=virtio,version=9p2000.L,msize=5000000,cache=mmap,posixacl console=ttyS0' \
  -fsdev local,security_model=mapped,multidevs=remap,id=fsdev-fsRoot,path=$HOME/vm/bullseye/ \
  -device virtio-9p-pci,id=fsRoot,fsdev=fsdev-fsRoot,mount_tag=fsRoot \
  -sandbox on,obsolete=deny,elevateprivileges=deny,spawn=deny,resourcecontrol=deny \
  -nographic
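The kernel and initrd file names in the command above contain the kernel version, which changes whenever the guest installs a kernel update. One way to avoid editing the command each time is to pick the newest vmlinuz-* found in the guest's /boot directory on the host. This is a minimal sketch. The function names, the `QEMU` override variable and the use of GNU `sort -V` for version ordering are assumptions of this example, while the QEMU options are those of the command above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the newest kernel version found as
# vmlinuz-<version> in the given boot directory (GNU sort -V ordering).
latest_kernel_version() {
    local bootdir=$1 f
    for f in "$bootdir"/vmlinuz-*; do
        if [ -e "$f" ]; then
            printf '%s\n' "${f##*/vmlinuz-}"
        fi
    done | sort -V | tail -n 1
}

# Hypothetical wrapper around the boot command above.
# $1: guest root directory on the host. Set QEMU=echo for a dry run.
boot_9p_root() {
    local root=${1%/} ver
    ver=$(latest_kernel_version "$root/boot")
    [ -n "$ver" ] || { echo "no kernel found in $root/boot" >&2; return 1; }
    "${QEMU:-/usr/bin/qemu-system-x86_64}" \
        -machine pc,accel=kvm,usb=off,dump-guest-core=off -m 2048 \
        -smp 4,sockets=4,cores=1,threads=1 -rtc base=utc \
        -boot strict=on -kernel "$root/boot/vmlinuz-$ver" \
        -initrd "$root/boot/initrd.img-$ver" \
        -append 'root=fsRoot rw rootfstype=9p rootflags=trans=virtio,version=9p2000.L,msize=5000000,cache=mmap,posixacl console=ttyS0' \
        -fsdev "local,security_model=mapped,multidevs=remap,id=fsdev-fsRoot,path=$root/" \
        -device virtio-9p-pci,id=fsRoot,fsdev=fsdev-fsRoot,mount_tag=fsRoot \
        -sandbox on,obsolete=deny,elevateprivileges=deny,spawn=deny,resourcecontrol=deny \
        -nographic
}

# Usage (on the host, as regular user):
# boot_9p_root ~/vm/bullseye
```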

Note: you need to use at least cache=mmap with the command above. This is not about performance: cache=mmap is what allows the mmap() call to work on the guest system at all. Without it the guest system would even fail to boot, because many software components rely on mmap() being available.

To speed things up you could also use e.g. cache=loose instead. That enables a filesystem cache on the guest side and reduces the number of 9p requests to the host. As a consequence, however, the guest might not see file changes made on the host side at all. The Linux kernel's 9p client currently does not revalidate for filesystem changes on the host side at all, although this is planned to change on the Linux kernel side soon. So choose according to your intended use case. You can switch between cache=mmap and e.g. cache=loose at any time.
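To double-check from inside the running guest which cache mode and msize are actually in effect, you can look at the mount options of the root filesystem. This is a sketch assuming the /proc/mounts format. Whether the kernel lists every 9p option (such as cache=) there may depend on the kernel version. `root_mount_options` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print type and options of the filesystem mounted
# on "/" from a mount table (default /proc/mounts). If "/" appears more
# than once, the last (topmost) entry wins.
root_mount_options() {
    local table=${1:-/proc/mounts}
    awk '$2 == "/" { line = $3 " " $4 } END { if (line == "") exit 1; print line }' "$table"
}

# Usage (inside the booted guest):
# root_mount_options
```

On systems with util-linux, `findmnt -no FSTYPE,OPTIONS /` gives a similar answer.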

Another aspect to consider is the performance impact of the msize argument (see Documentation/9psetup#msize for details).

Finally, log in as user root to the booted guest and install any other packages that you need, like a web server, SMTP server, etc.

apt update
apt search ...
apt install ...

That's it!

Questions and Feedback

Refer to Documentation/9p#Contribute for patches, issues etc.